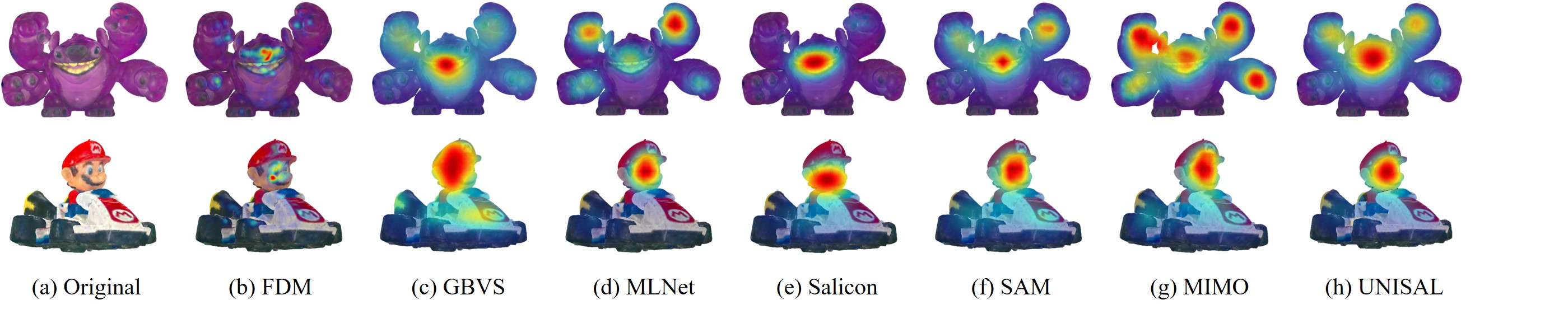

Original colored 3D models

- 17 high-quality colored 3D meshes.

Fixations - 204 files (MAT format), covering the 17 colored 3D models under 12 viewing orientations (17 × 12 = 204).
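After downloading, a quick sanity check is to confirm that the fixation folder holds all 17 × 12 = 204 MAT files. The sketch below is a hypothetical helper, not part of the released dataset. It checks only the file count, because this README does not specify the file-naming scheme or the variables stored inside each MAT file. The folder name `fixations` is an assumption. The search is non-recursive and matches the lowercase `.mat` extension only.

```python
from pathlib import Path

# Counts stated in this README.
NUM_MODELS = 17
NUM_ORIENTATIONS = 12
EXPECTED_FILES = NUM_MODELS * NUM_ORIENTATIONS  # 204


def check_fixation_folder(folder: str) -> bool:
    """Return True if `folder` directly contains exactly EXPECTED_FILES .mat files.

    Only the file count is checked; file names and the variables stored
    inside each MAT file are NOT assumed (the README does not document them).
    """
    mat_files = sorted(Path(folder).glob("*.mat"))
    print(f"found {len(mat_files)} .mat files, expected {EXPECTED_FILES}")
    return len(mat_files) == EXPECTED_FILES


if __name__ == "__main__":
    # "fixations" is a hypothetical local path; point it at the extracted files.
    ok = check_fixation_folder("fixations")
    print("complete" if ok else "incomplete")
```

To inspect the contents of an individual file, `scipy.io.loadmat` returns a dictionary of the stored MATLAB variables. The variable names are not documented here, so list the dictionary keys before relying on any particular field.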

Code: coming soon.

Acknowledgements

We are deeply grateful to Anass Nouri, Christophe Charrier and Olivier Lézoray for providing the high-quality colored 3D models. We also sincerely thank all subjects for their active participation in the experiment.

This work was supported in part by the Natural Science Foundation of Hubei Province, China under Grant 2022CFB984, in part by the China Postdoctoral Science Foundation under Grant 2022M713503, in part by the National Natural Science Foundation of China under Grant 62036005, and in part by the Fundamental Research Funds for the Central Universities, Zhongnan University of Economics and Law.